John Cummings and Navino Evans

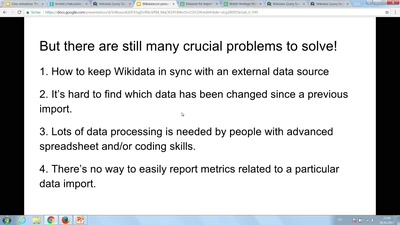

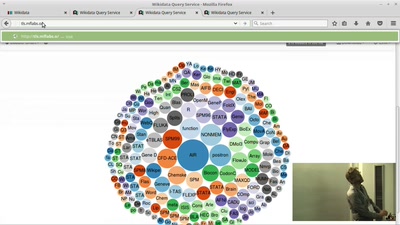

This session is a workshop intended to bring together Wikidata wizards and enthusiasts who can help resolve some of the major pain points in the data import process. For example:

- It’s very difficult to keep Wikidata ‘in sync’ with an external data source (especially when the source has no unique ID system!).
- There’s no easy way to report metrics related to a particular data import.
- It’s hard to find which data has changed since a previous import.
- Lots of data processing is needed, and it requires people with advanced spreadsheet and/or coding skills.

The workshop will be framed around a presentation section, where we will share our experience of importing data from UNESCO and show the data import documentation that has been created as a result.

Tool development will play a huge part in solving the problems with data import. Central to our discussion will be where existing tools fit into the process (e.g. QuickStatements, Mix’n’Match, the Wikidata Query Service), and how things may need to change when GLAMPipe is ready to become the hub for data imports.

The hope is that we can come away from this session with a plan that will help shape future decisions about the data import process, documentation and related tools.