Human Mapping with Machine Data

Christopher Beddow and Edoardo Neerhut

How useful are map features automatically extracted from street-level images? Can they be trusted? These are some of the questions we tried to answer through community campaigns and student-led research in 2019. We will share what we learned and invite a broader discussion of the methods that can be used to turn automatically extracted features into useful OpenStreetMap data.

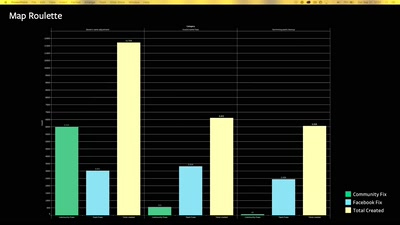

In early 2019, Mapillary began generating point data representing map features recognized and extracted from images, including benches, fire hydrants, bike racks, and post boxes. We worked with various Mapillary communities and users to test the value of this new form of data. In this presentation, we'll explore two projects. The first was the ***#mapillary2osm*** campaign, which provided point data as GeoJSON files to six communities (Antwerp, Austin, Ballerup, Kyiv, Melbourne, São Paulo) and encouraged them to turn each point into a node on OpenStreetMap. Each community was given a 25 km² focus area and asked to add these points using the hashtag ***#mapillary2osm***, with results shared on a public leaderboard.
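A minimal sketch of the GeoJSON-to-node step might look like the following. It assumes each GeoJSON feature is a Point carrying a `value` property that names the detected object. The `object--*` value strings, the `TAG_MAP` table, and the helper names are hypothetical and are not Mapillary's documented schema. The OSM tags they map to (`amenity=bench`, `emergency=fire_hydrant`, `amenity=bicycle_parking`, `amenity=post_box`) are standard. The sketch approximates a 25 km² focus area as a 5 km × 5 km box around a chosen center and writes the remaining points as new nodes (negative IDs) in an `.osm` file that an editor such as JOSM can open for review.

```python
import math
from xml.sax.saxutils import quoteattr

# Hypothetical mapping from detection values to standard OSM tags.
TAG_MAP = {
    "object--bench": {"amenity": "bench"},
    "object--fire-hydrant": {"emergency": "fire_hydrant"},
    "object--bike-rack": {"amenity": "bicycle_parking"},
    "object--mailbox": {"amenity": "post_box"},
}


def focus_bbox(lat, lon, area_km2=25.0):
    """Approximate square bounding box of area_km2 centered on (lat, lon).

    Returns (min_lon, min_lat, max_lon, max_lat) in degrees, using
    ~111.32 km per degree of latitude (fine for small areas).
    """
    half_side_km = math.sqrt(area_km2) / 2
    dlat = half_side_km / 111.32
    dlon = half_side_km / (111.32 * math.cos(math.radians(lat)))
    return (lon - dlon, lat - dlat, lon + dlon, lat + dlat)


def points_to_osm_xml(geojson, bbox):
    """Convert recognized Point features inside bbox to new OSM nodes."""
    min_lon, min_lat, max_lon, max_lat = bbox
    lines = ['<osm version="0.6" generator="mapillary2osm-sketch">']
    node_id = -1  # negative IDs mark objects that do not yet exist in OSM
    for feature in geojson.get("features", []):
        geom = feature.get("geometry") or {}
        if geom.get("type") != "Point":
            continue
        lon, lat = geom["coordinates"][:2]  # GeoJSON order is lon, lat
        if not (min_lon <= lon <= max_lon and min_lat <= lat <= max_lat):
            continue  # outside the focus area
        value = (feature.get("properties") or {}).get("value")
        tags = TAG_MAP.get(value)
        if tags is None:
            continue  # no known OSM equivalent; skip
        lines.append(f'  <node id="{node_id}" lat="{lat}" lon="{lon}">')
        for k, v in tags.items():
            lines.append(f"    <tag k={quoteattr(k)} v={quoteattr(v)}/>")
        lines.append("  </node>")
        node_id -= 1
    lines.append("</osm>")
    return "\n".join(lines)


if __name__ == "__main__":
    # Illustrative input: one hydrant detection near central Austin.
    sample = {
        "type": "FeatureCollection",
        "features": [{
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [-97.7431, 30.2672]},
            "properties": {"value": "object--fire-hydrant"},
        }],
    }
    print(points_to_osm_xml(sample, focus_bbox(30.2672, -97.7431)))
```

Writing a file for review, rather than uploading directly, keeps a mapper in the loop to check each detection against the imagery before it becomes OpenStreetMap data. The #mapillary2osm hashtag would go in the changeset comment at upload time, not on the nodes themselves.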

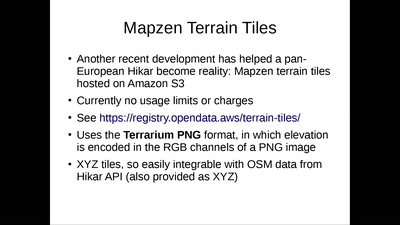

The second was a project led by undergraduate students from the University of Washington's Department of Geography. It focused on a suburb of Portland, Oregon, with the goal of validating and verifying Mapillary data in order to enrich OpenStreetMap. The work involved augmenting OpenStreetMap with Mapillary point data and measuring how the OpenStreetMap data differed before and after the project.
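One simple way to quantify a before-and-after difference is a per-tag count over two snapshots of the study area. The sketch below assumes each snapshot has already been reduced to a list of node tag dictionaries (for example, from OpenStreetMap extracts taken before and after the edits). The `TRACKED` set and function names are illustrative and do not describe the students' actual method.

```python
from collections import Counter

# Illustrative feature classes to track, as (key, value) OSM tag pairs.
TRACKED = {
    ("amenity", "bench"),
    ("emergency", "fire_hydrant"),
    ("amenity", "bicycle_parking"),
    ("amenity", "post_box"),
}


def count_tracked(nodes):
    """Count nodes per tracked tag; nodes is a list of tag dicts."""
    counts = Counter()
    for tags in nodes:
        for key, value in tags.items():
            if (key, value) in TRACKED:
                counts[f"{key}={value}"] += 1
    return counts


def compare_snapshots(before, after):
    """Map each tracked tag to (count_before, count_after, change)."""
    b, a = count_tracked(before), count_tracked(after)
    return {k: (b[k], a[k], a[k] - b[k]) for k in sorted(set(b) | set(a))}


if __name__ == "__main__":
    before = [{"amenity": "bench"}, {"highway": "crossing"}]
    after = before + [{"amenity": "bench"}, {"emergency": "fire_hydrant"}]
    for tag, (n_before, n_after, delta) in compare_snapshots(before, after).items():
        print(f"{tag}: {n_before} -> {n_after} ({delta:+d})")
```

Raw counts only show how much was added; judging whether the added points are correct still requires field or imagery checks, which is the validation question the project set out to address.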

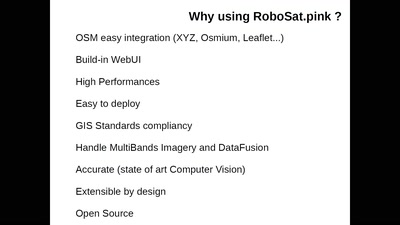

We'll conclude by looking at the longer-term possibilities of editing OpenStreetMap with data derived from computer vision.